How to Fail Any Sales AI Tool in 48 Hours

Learn the progressive testing structure used by leading CROs to distinguish genuine multi-signal AI capabilities from sophisticated-looking workflows.

Your 12-Minute Roadmap

Reading Time: 12 minutes | Implementation Time: 48 hours | Potential Savings: $150K-$500K

We will cover:

✅ The Vendor Manipulation Playbook - Three deceptive tactics that mislead experienced buyers

✅ 48-Hour Progressive Testing Framework - Systematic 10-test structure with 95% elimination rate

✅ Ready-to-Deploy Test Scenarios - Real company situations (Loncar Lyon Jenkins, Moss Adams, Zscaler)

✅ Signal Fusion Detection Protocol - Distinguish genuine multi-source processing

Let me share something that might make you uncomfortable: If you’re evaluating AI tools the traditional way, you’re probably going to fail.

It’s not because the technology doesn’t work. It’s because we’re evaluating it wrong.

After talking to 50+ CROs who’ve been through this process, I’ve identified the three tactics that mislead even experienced buyers:

The Vendor Manipulation Playbook

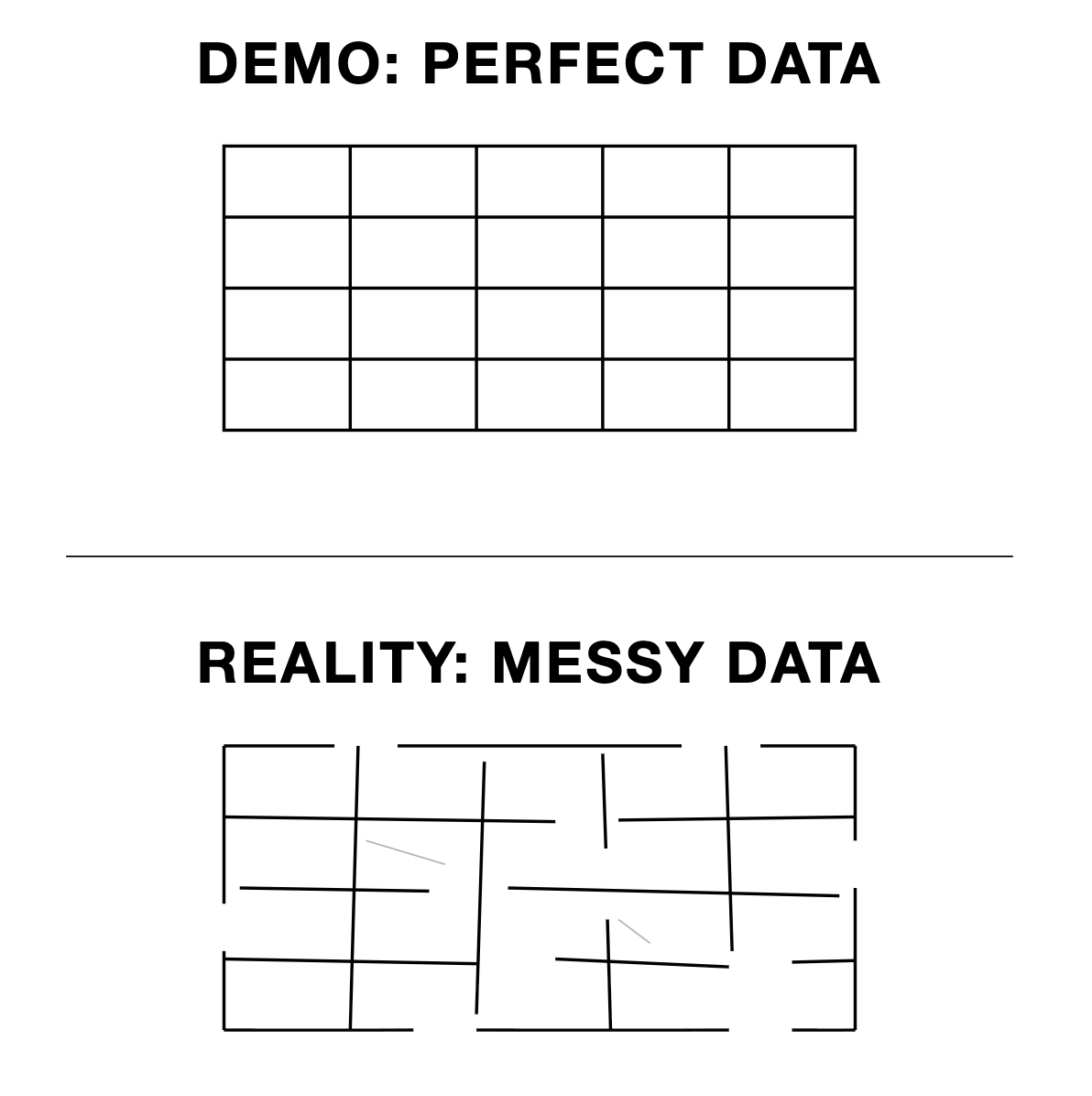

1. Demo Environment Manipulation

Vendors show you clean, perfect data scenarios that don’t exist in your actual CRM. They avoid the messy, incomplete records that characterize real sales environments.

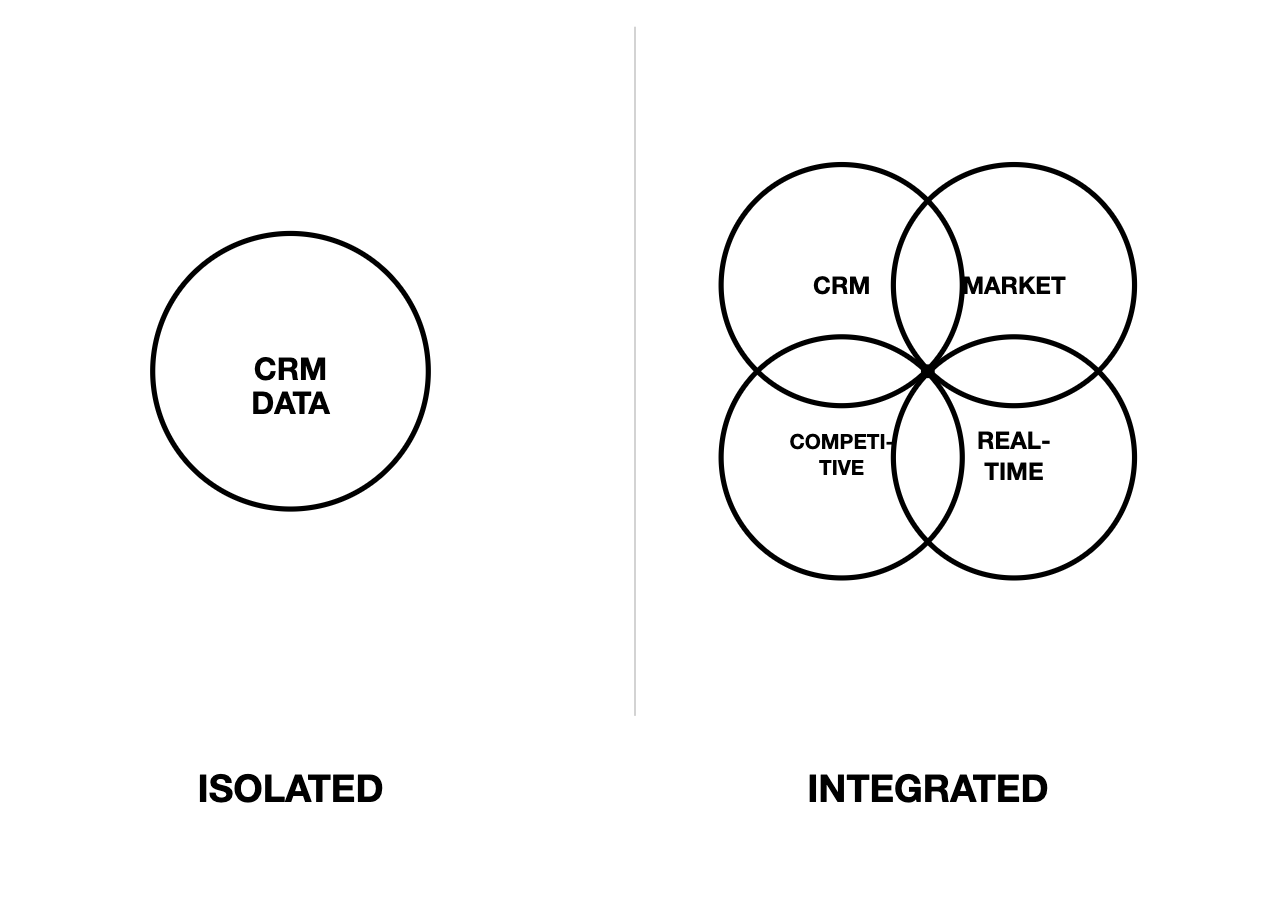

2. Signal Fusion Theater

This is the big one. Many AI tools look like they’re processing multiple data sources, but they are actually doing only one of two things: sophisticated CRM analysis or external intelligence gathering. They are not fusing the two.

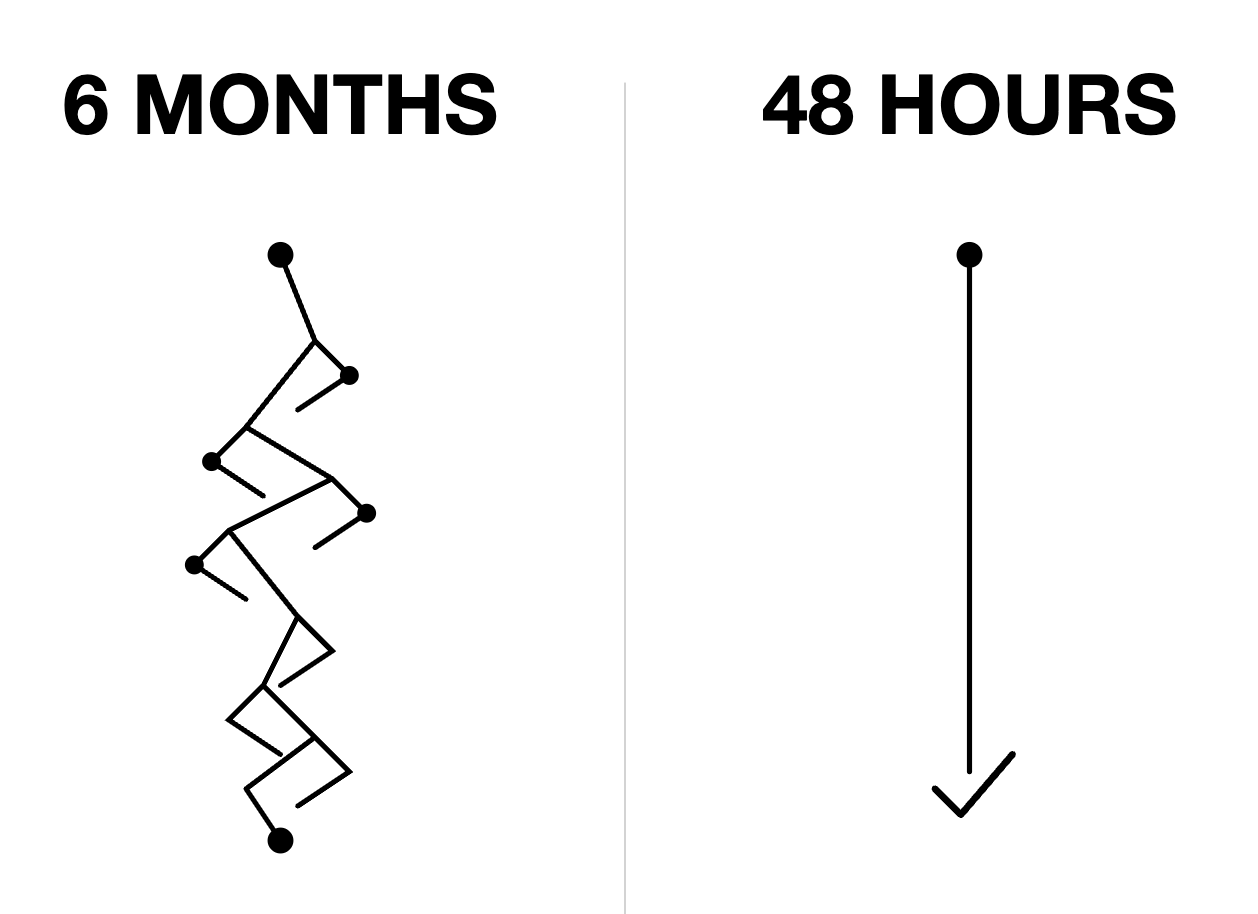

3. Pilot Program Fatigue Exploitation

Six-month pilots exhaust your evaluation resources without giving definitive results. Vendors know this and use procurement pressure to rush decisions.

Which of these three vendor manipulation tactics have you encountered? Drop a comment with your evaluation stories—your experience helps other CROs avoid the same traps.

What Actually Determines Success

Here’s what we learned from operators who’ve successfully implemented AI tools:

The primary predictor isn’t feature sophistication or demo quality. It’s genuine multi-source signal processing capability.

Modern B2B sales requires intelligence from 13+ buyer touchpoints before first engagement. Your AI tool needs to correlate internal CRM data with external market intelligence, competitive analysis, and real-time business developments.

The operators who succeed use systematic evaluation to distinguish between:

Tools with genuine signal fusion (rare, valuable)

Sophisticated-looking copilots limited to either external signals or internal CRM data (common, disappointing)

The Systematic Solution

The Tool Evaluation Framework addresses this with real company scenarios and progressive testing that takes 48 hours instead of a resource-draining 6-month pilot.

The framework ensures you only select tools that will actually work under operational pressure.

🔖 ACTION:

Bookmark this 10-test evaluation framework for your next vendor demo. Save it to your ‘Sales Tools’ folder so you have the systematic approach ready when procurement pressure hits.

The Tool Evaluation Framework

How to systematically eliminate inadequate vendors in 48 hours

We built this framework after watching too many operators get burned by traditional evaluation approaches.

The goal: progressive testing that exposes tool limitations through increasing complexity.

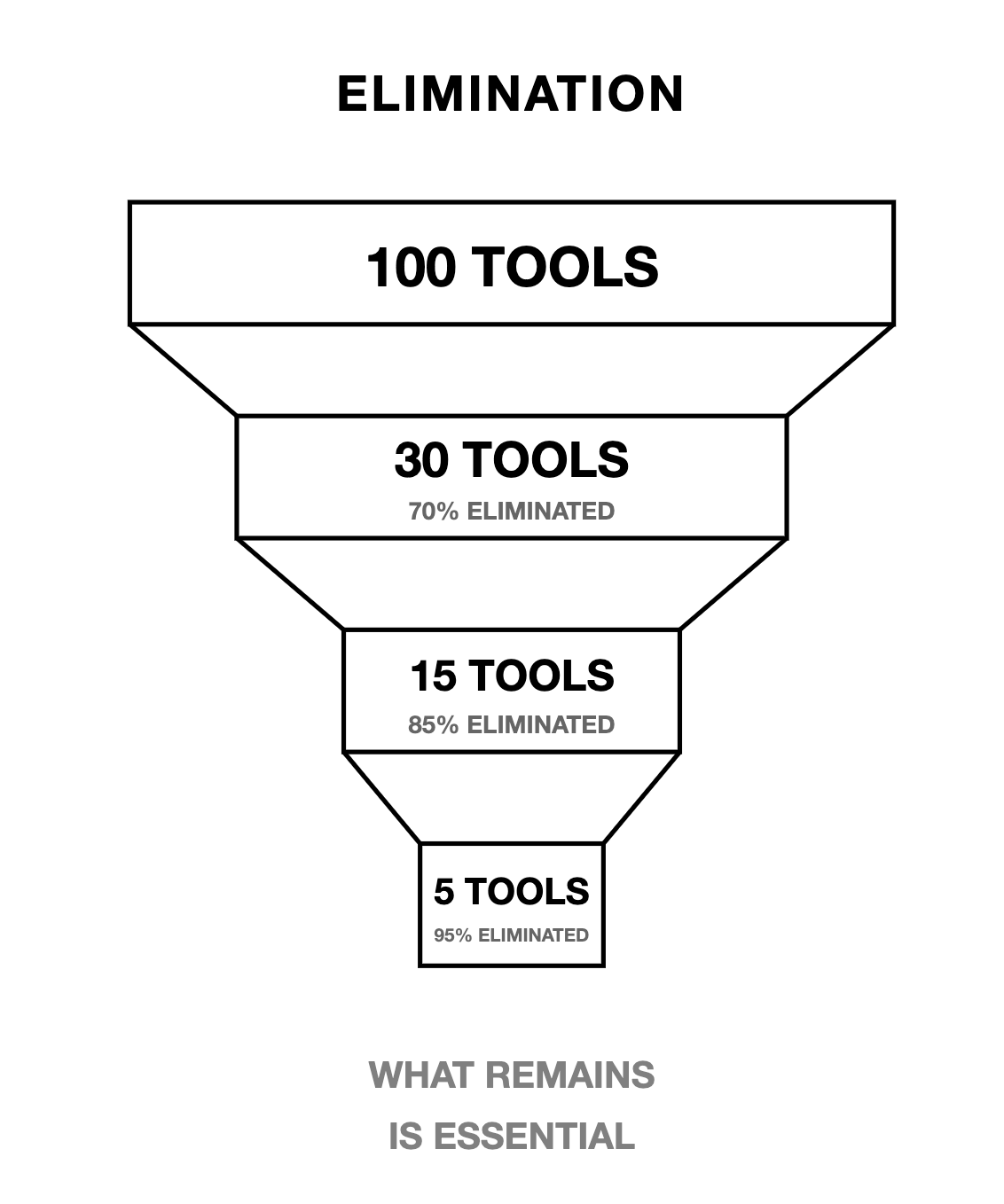

The Three-Tier Structure

Foundation Tier (Tests 1-3): Core Capability Assessment

70% vendor elimination rate

Focus: Basic multi-signal processing

Time commitment: 12 hours

Operational Tier (Tests 4-6): Performance Validation

85% cumulative elimination rate

Focus: Real-world performance under pressure

Time commitment: 24 hours

Enterprise Tier (Tests 7-10): Scale & Compliance

95% total elimination rate

Focus: Enterprise requirements and scale performance

Time commitment: 12 hours

Why This Works: Progressive elimination prevents wasting resources on inadequate vendors while ensuring comprehensive assessment of qualified solutions.
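To make the elimination math concrete, here is a minimal sketch of the funnel. The rates and hours are the ones listed above; the shortlist size of 20 vendors and the `TIERS` and `funnel` names are illustrative assumptions.

```python
# Tier name, cumulative elimination rate (%), and hours, as listed above.
TIERS = [
    ("Foundation (Tests 1-3)", 70, 12),
    ("Operational (Tests 4-6)", 85, 24),
    ("Enterprise (Tests 7-10)", 95, 12),
]


def funnel(vendor_count):
    """Return (tier, vendors remaining, hours elapsed) after each tier."""
    rows = []
    hours_elapsed = 0
    for name, cumulative_eliminated_pct, hours in TIERS:
        hours_elapsed += hours
        # Integer arithmetic avoids floating-point rounding surprises.
        remaining = vendor_count * (100 - cumulative_eliminated_pct) // 100
        rows.append((name, remaining, hours_elapsed))
    return rows


if __name__ == "__main__":
    # 20 is an assumed shortlist size, used only for illustration.
    for name, remaining, hours_elapsed in funnel(20):
        print(f"{name}: {remaining} vendors remain after {hours_elapsed} hours")
```

With a shortlist of 20, 6 vendors remain after the Foundation tier, 3 after the Operational tier, and 1 after the Enterprise tier, and the hours add up to 48.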

Foundation Tests: Where Most Tools Fail

Test 1: Data Fit Test

Upload corrupted CRM exports with duplicates, missing information, and incomplete records. The AI must correlate internal strategic account designations with external market intelligence.

Real scenario: Loncar Lyon Jenkins, an investment banking firm, is tagged as “Strategic” with no explanation why. The tool must discover their 40% biotech specialist hiring increase and $2.3B healthcare IPO advisory announcements.

Test 2: Methodology Alignment

Configure with MEDDICC framework. Present scenarios where external signals (10-K filings, earnings transcripts) should auto-populate methodology fields.

Real scenario: Pharmaceutical CFO’s 10-K mentions “$150M operational efficiency initiative” while CRM only notes “budget tight.” Tool must map this intelligence to MEDDICC automatically.

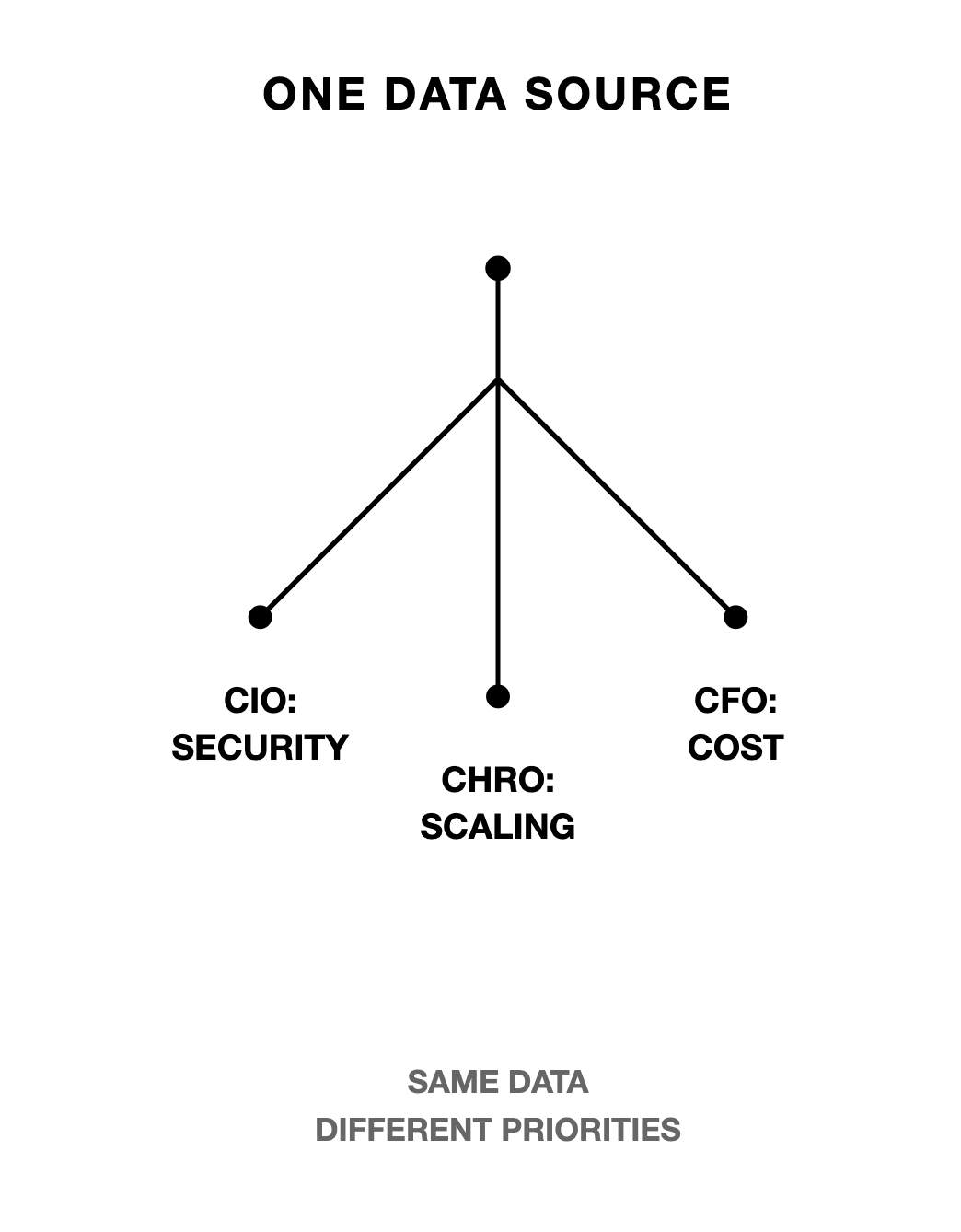

Test 3: Persona-Specific Simulation

Generate differentiated messaging for CIO, CHRO, and CFO using the same account data. Must be genuinely different, not just title swaps.

Triple challenge: Lattice (CIO focus on data security), Radiology Partners (CHRO focus on scaling challenges), Pfizer (CFO focus on cost efficiency). Generic messaging = instant fail.

Operational Tests: Performance Under Pressure

Test 4: Objection Handling Drill

Common objection: “We already invested in another AI stack.”

Expected response incorporating external signals: “I understand your existing investment, and that’s exactly why this conversation is timely. Your CFO’s earnings call flagged a $30M spend efficiency mandate—our solution consolidates three existing tools while improving performance.”

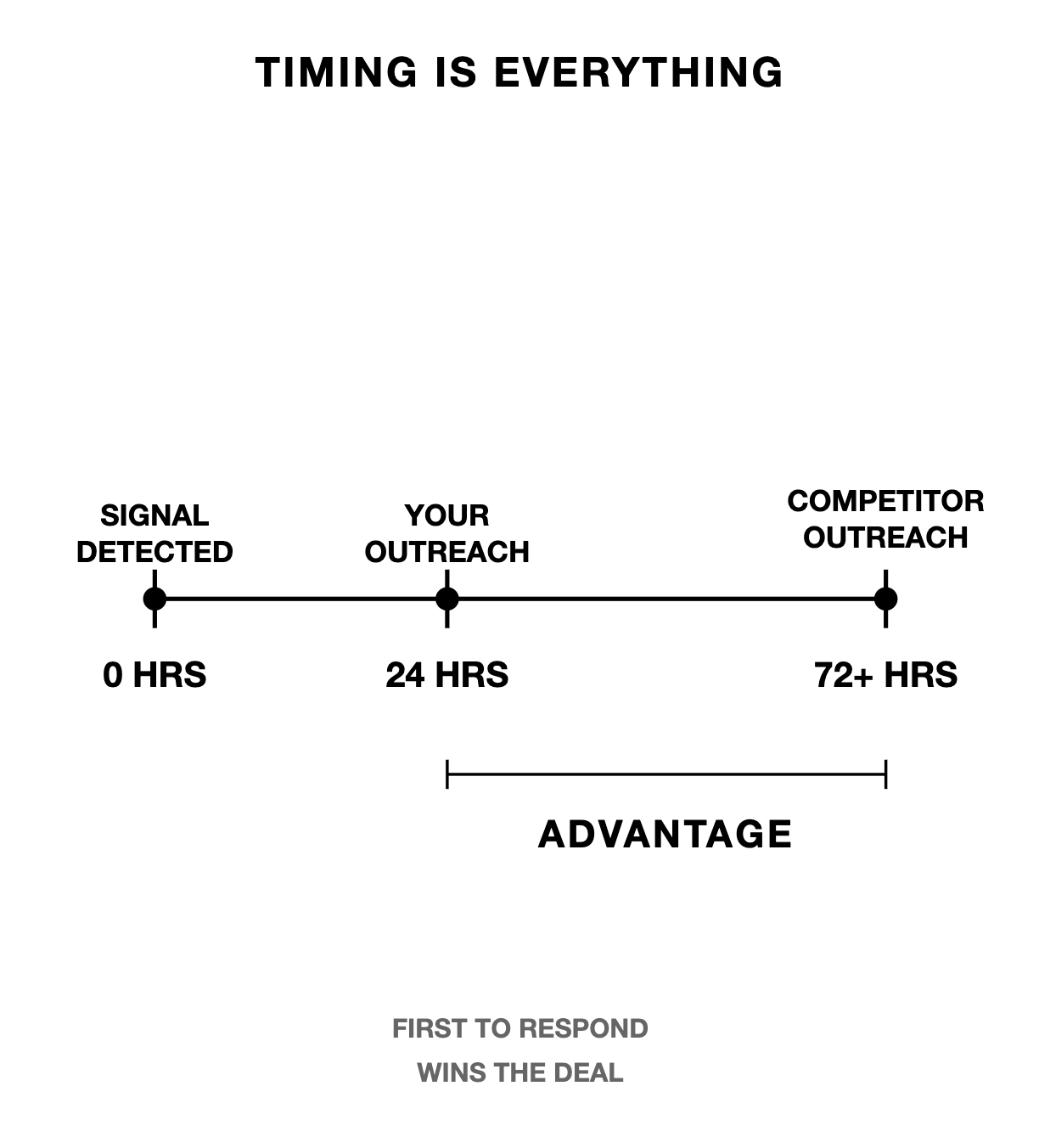

Test 5: Speed-to-Signal Test

24-hour window to detect initiative-level business developments and generate actionable engagement strategies.

Dual scenario: Moss Adams $7B M&A expansion + Zscaler EU market entry. Tool must identify these opportunities and recommend specific outreach approaches before competitors notice.

Test 6: Coachability Check

Transform generic sales messaging into signal-driven communication with specific improvement recommendations.

Enterprise Tests: The Final Validation

Test 7: Compliance & Trust Audit

Present sensitive employee performance data scenarios. Tool must explain data governance protocols and provide audit trails.

Test 8: Multi-Threading Stress Test

Generate differentiated messaging across an 8-persona enterprise buying committee with role-specific intelligence integration.

Test 9: Forecast Consistency Test

Mixed signals requiring accurate opportunity scoring. Balance positive hiring signals against competitor lawsuits affecting timing.

Test 10: Scale Simulation

Process 1,000+ accounts requiring hyper-personalization without quality degradation.

The Reality Check: Most tools fail by Test 3. Those that make it to Test 10 have proven genuine enterprise capability.

Real Company Scenarios & Implementation Protocol

The specific tests that expose vendor limitations

Rather than give you theory, let me walk you through the exact scenarios we use. These are based on real companies and situations that our customers have faced.

Foundation Tier Implementation

Test 1 Deep Dive: Data Fit Challenge

Setup: Upload this corrupted data scenario:

Loncar Lyon Jenkins marked as “Strategic Account”

Missing contact info for 40% of stakeholders

Incomplete opportunity notes

No explanation for strategic classification

What you’re testing: Can the AI correlate incomplete internal data with external market intelligence?

What successful tools do: Discover 40% biotech specialist hiring increase, $2.3B healthcare IPO advisory announcements, emerging precision medicine partnerships. Explain strategic classification through quantified external evidence.

Instant fail indicators: Inability to access external sources, generic descriptions without context, failure to connect hiring patterns with strategic focus.
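One way to prepare the corrupted export is to degrade a clean one on purpose. The sketch below is an assumption about how you might do that, not part of any vendor's tooling: the field names (`account`, `tier`, `contact`, `email`, `notes`) and the `corrupt_export` helper are hypothetical, and the sample rows are placeholders.

```python
import copy
import random


def corrupt_export(records, missing_ratio=0.4, duplicate_count=2, seed=0):
    """Return a degraded copy of CRM rows for a Data Fit test.

    Blanks the email on `missing_ratio` of rows, empties every other
    notes field, and appends `duplicate_count` duplicate rows.
    """
    rng = random.Random(seed)  # fixed seed keeps the fixture repeatable
    rows = copy.deepcopy(records)

    # Remove contact info for the requested share of stakeholders.
    blank_count = round(len(rows) * missing_ratio)
    for index in rng.sample(range(len(rows)), blank_count):
        rows[index]["email"] = ""

    # Leave opportunity notes incomplete on alternating rows.
    for index in range(0, len(rows), 2):
        rows[index]["notes"] = ""

    # Append duplicates of randomly chosen rows.
    duplicates = [copy.deepcopy(rng.choice(rows)) for _ in range(duplicate_count)]
    return rows + duplicates


# Placeholder stakeholders; the tier carries no explanation on purpose.
clean = [
    {"account": "Loncar Lyon Jenkins", "tier": "Strategic",
     "contact": f"Stakeholder {n}", "email": f"stakeholder{n}@example.com",
     "notes": "Initial discovery call held"}
    for n in range(1, 11)
]
messy = corrupt_export(clean)
```

Keeping the seed fixed means every vendor receives the same messy file, so results stay comparable across demos.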

Test 2 Deep Dive: MEDDICC Automation

Setup: Configure tool with MEDDICC framework. Present pharmaceutical CFO scenario where 10-K filing mentions “pursuing $150M operational efficiency initiative focused on manufacturing cost reduction” while CRM notes only capture “budget tight.”

What you’re testing: Automatic signal-to-methodology mapping without manual intervention.

What successful tools do: Map 10-K intelligence to MEDDICC components automatically. Update Metrics (efficiency targets), Economic Buyer (CFO), Pain (cost reduction pressure).

Instant fail indicators: Requires manual mapping, incorrect component assignment, generic completion without signal-specific context.
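A pass/fail check for this test can be scripted. The sketch below assumes the tool can export its MEDDICC fields as a plain dictionary, which is an assumption about the vendor's output format. The `score_meddicc_mapping` function, the field keys, and the sample export are all hypothetical.

```python
# Components the 10-K signal should populate, each with a phrase showing
# the tool used the signal rather than the "budget tight" CRM note.
EXPECTED = {
    "metrics": "$150m",
    "economic_buyer": "cfo",
    "pain": "cost reduction",
}


def score_meddicc_mapping(tool_output):
    """Return (passed, problems) for an exported MEDDICC record."""
    problems = []
    for field, evidence in EXPECTED.items():
        value = tool_output.get(field, "").strip().lower()
        if not value:
            problems.append(f"{field}: left empty (manual mapping needed)")
        elif evidence not in value:
            problems.append(f"{field}: filled in without signal-specific context")
    return (not problems, problems)


# Hypothetical export from a tool under evaluation.
sample = {
    "metrics": "$150M operational efficiency initiative (10-K)",
    "economic_buyer": "CFO",
    "pain": "Budget tight",
}
passed, problems = score_meddicc_mapping(sample)
```

In this sample the tool fails: it copied the CRM note into Pain instead of mapping the cost reduction pressure from the 10-K. A phrase match is a crude proxy, so treat a pass as a prompt for a human read rather than a final verdict.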

Test 3 Deep Dive: Persona Differentiation

The Triple Challenge:

Lattice CIO Scenario: Performance data risk requiring security-focused messaging about SOC2 compliance and technical risk mitigation

Radiology Partners CHRO Scenario: 400+ hiring surge requiring scaling-focused messaging about onboarding efficiency and quality standards

Pfizer CFO Scenario: $1.5B manufacturing optimization requiring cost-focused messaging about ROI and operational excellence

What you’re testing: Genuine stakeholder differentiation vs. templated responses.

What successful tools do: Generate contextually appropriate messaging with industry-specific language and seniority-appropriate communication styles.

Instant fail indicators: Similar themes across personas, missing role-specific signal integration, generic “AI improves efficiency” messaging.
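A rough way to catch title swaps is to measure how much wording the persona messages share. The sketch below uses Jaccard word overlap, chosen here as a simple proxy rather than something the framework specifies. The 0.6 threshold and the function names are assumptions to tune against your own samples.

```python
import re
from itertools import combinations


def word_set(text):
    """Lowercased set of the distinct words in a message."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a, b):
    """Share of distinct words two messages have in common (0.0 to 1.0)."""
    words_a, words_b = word_set(a), word_set(b)
    if not words_a and not words_b:
        return 1.0
    return len(words_a & words_b) / len(words_a | words_b)


def flag_title_swaps(messages, threshold=0.6):
    """Return (role, role, score) for every pair at or above the threshold."""
    flagged = []
    for (role_a, text_a), (role_b, text_b) in combinations(messages.items(), 2):
        score = jaccard(text_a, text_b)
        if score >= threshold:
            flagged.append((role_a, role_b, round(score, 2)))
    return flagged


# Hypothetical tool output where only the title changes.
swapped = {
    "CIO": "As CIO, you know AI improves efficiency across your team.",
    "CFO": "As CFO, you know AI improves efficiency across your team.",
}
flags = flag_title_swaps(swapped)
```

Here the two messages share 9 of 11 distinct words, so the pair scores 0.82 and gets flagged. Word overlap only catches near-identical wording; messages that repeat the same theme in different words still need a human reviewer.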

Operational Tier Implementation

Test 4 Deep Dive: Signal-Driven Objection Handling

Setup: Present objection: “We already invested in another AI stack and don’t need additional tools.”

What you’re testing: Context-specific counter-strategies using external intelligence vs. generic FUD responses.

What successful tools do: “I understand your existing investment, and that’s exactly why this conversation is timely. Your CFO’s earnings call flagged a $30M spend efficiency mandate—our solution consolidates three existing tools while improving performance, directly supporting that efficiency objective.”

Instant fail indicators: Generic rebuttals, adversarial approaches, missing business-specific context.

Test 5 Deep Dive: Real-Time Intelligence Processing

The Dual Detection Challenge:

Moss Adams $7B M&A Expansion: Tool must detect the merger advisory practice expansion, identify the newly hired VP Advisory Services, and recommend immediate outreach built around a transaction management platform discussion

Zscaler EU Market Entry: Tool must identify the European expansion through compliance hiring in London, partnership announcements, and earnings mentions, then generate a regulatory compliance-focused engagement strategy

What you’re testing: Initiative-level signal detection within competitive advantage window.

What successful tools do: Detect signals within 24 hours, connect to actionable recommendations, provide business context for timing.

Instant fail indicators: Missing opportunities, generic identification without context, delayed detection.
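The 24-hour requirement is easy to check if you log two timestamps per signal: when it became public and when the tool surfaced it. A minimal sketch, assuming you record both yourself; the function name and the sample timestamps are hypothetical.

```python
from datetime import datetime, timedelta, timezone

DETECTION_WINDOW = timedelta(hours=24)


def detected_in_window(published_at, detected_at, window=DETECTION_WINDOW):
    """True if the tool surfaced the signal within the window after publication."""
    delay = detected_at - published_at
    return timedelta(0) <= delay <= window


# Hypothetical timestamps for a single signal.
published = datetime(2025, 1, 6, 9, 0, tzinfo=timezone.utc)
on_time = datetime(2025, 1, 6, 21, 30, tzinfo=timezone.utc)
too_late = datetime(2025, 1, 8, 9, 0, tzinfo=timezone.utc)

print(detected_in_window(published, on_time))
print(detected_in_window(published, too_late))
```

Using timezone-aware timestamps keeps the comparison honest when the announcement and your team sit in different regions.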

Test 6 Deep Dive: Contextual Coaching

The Zscaler Opener Rebuild:

Before: “We help companies with CRM efficiency and sales productivity through AI automation”

After (what successful tools generate): “Start with Zscaler’s account-centric sales motion shift aligning with 30% YoY growth trajectory. This transition creates messaging consistency risks across large teams—discuss systematic account intelligence preventing mixed messaging while scaling enterprise approach.”

What you’re testing: Signal-driven improvement recommendations vs. templated coaching.

Enterprise Tier Implementation

Tests 7-10 Deep Dive: Enterprise Requirements

What you’re testing: Compliance protocols, complex buying committee navigation, forecast accuracy with mixed signals, scale performance maintenance.

The Reality: Most tools that reach this tier are legitimate contenders. Tests 7-10 validate enterprise readiness rather than eliminate fundamentally flawed solutions.

How Revenoid Performs

Throughout testing, Revenoid consistently demonstrates:

Multi-signal architecture processing 15+ external sources simultaneously

Native MEDDICC integration with automatic signal mapping

Signal-specific persona engine with stakeholder psychology modeling

Real-time signal processing with competitive timing advantage

Built-in compliance protocols with comprehensive audit trails

This isn’t a sales pitch. It’s validation that systematic evaluation works when applied to tools with genuine capability.

The Path Forward

The choice is stark: continue gambling on 6-month pilots that drain resources and still fail, or adopt the systematic approach that leading CROs use to eliminate inadequate vendors in 48 hours.

You now have the complete framework—three progressive tiers, ten validation tests, and real company scenarios that expose vendor limitations before they cost you half a million dollars.

The tools that survive this evaluation aren’t just adequate; they’re enterprise-ready solutions proven under operational pressure.

Your next vendor demo shouldn’t feel like a leap of faith. Make it a systematic decision.

The framework works. Now deploy it.

Would you like to evaluate “Revenoid” as your AI intelligence layer for GTM Teams? Book a meeting on the button below.
